Understanding why bots crawl your site and your robots.txt file

What are robots?

- Search engines and shopping portal websites use robotic applications (sometimes called robots, bots, spiders, or crawlers) to index websites their users might want to see.

- If your website appears in Google search results, it is because Google previously sent a robot to index your site.

Why do robots need to be controlled?

- When a robotic program indexes your website, it is much like a real person visiting it. While a real person may click on 5-10 pages during a typical visit, a robot may go through several hundred to several thousand pages in a very short period of time.

- At a certain level, too much robotic activity can put a serious strain on your website servers. Our hosting environment is set up to handle sudden spikes in website traffic. However, there are additional variable fees for websites with sustained high levels of traffic. Controlling unwanted robotic activity can help control costs associated with extra high traffic.

Are all robots bad?

- No. The most popular search engine robots are usually good for your business. Because 85% of all internet searches come from the big three search engines (Google, Yahoo, and MSN), most retailers will want to let these robots crawl their websites.

- The remaining 15% of internet searches are spread across hundreds of smaller, more obscure search engines and shopping aggregators.

- These smaller search portals deliver very little value to most retailers in terms of the number of actual consumer referrals (because very few people use them).

- Further, robots from these smaller search firms tend to crawl websites more frequently and more rapidly than necessary in an effort to build up their own databases (using your data).

- If left unchecked, rogue robots can create higher operating costs for retailers while providing virtually zero value in terms of customer referrals.

- When a robot visits your website, the first thing it does is look for a special file called "robots.txt." This file contains a specific set of instructions that will tell the robot if it is allowed to crawl your website or not.

- If no robots.txt file exists, any robot visiting your site will assume that it can crawl your entire site. However, if a robots.txt file is present, the robot is supposed to follow the instructions found in that file.

- The default instructions in the robots.txt file for all our sites tell robots not to crawl the website unless they belong to Google, Yahoo, or MSN.

- We provide you with tools to create further robot restrictions or to remove these restrictions.
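As a rough illustration of how a well-behaved robot reads these instructions, the sketch below uses Python's standard `urllib.robotparser` module. The rules in `DEFAULT_RULES` are a hypothetical stand-in for a default file that allows only the three major search engines; the actual default file, the crawler names it lists, and the `www.example.com` address are assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical stand-in for a default file: three major crawlers are
# allowed everywhere and every other robot is blocked. The real default
# file may use different names or rules.
DEFAULT_RULES = """\
User-agent: Googlebot
Disallow:

User-agent: Slurp
Disallow:

User-agent: msnbot
Disallow:

User-agent: *
Disallow: /
"""


def is_allowed(rules: str, robot_name: str, url: str) -> bool:
    """Return True if robot_name may fetch url under the given rules."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(robot_name, url)


if __name__ == "__main__":
    page = "https://www.example.com/catalog/"
    # "ExampleBot" is a made-up name standing in for any unlisted robot.
    for robot in ("Googlebot", "Slurp", "msnbot", "ExampleBot"):
        print(robot, is_allowed(DEFAULT_RULES, robot, page))
```

An empty `Disallow:` line means nothing is off limits for that robot, while `Disallow: /` blocks the whole site. Keep in mind that robots.txt is a request, not an enforcement mechanism: only robots that choose to honor the file will follow it.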

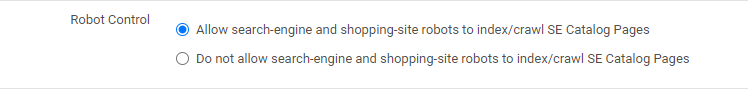

Robot Controls For Your Catalog

- The vast majority of pages found on our websites are the SE Catalog pages.

- Allowing or limiting the crawling of these sections can have a significant impact on the amount of robotic activity taking place on your website.

- To view or change these settings, navigate to Settings > Commerce > Catalog Settings > Robot Control.

Ultimately the choice is up to you as to whether you want search engine robots crawling your catalog pages. If you are not sure about what to do, please consult with a member of our Client Success Team about your current strategy regarding search engines.

Modifying The Robots.txt File

The tools outlined in the previous section can be used to tell Google, MSN, and Yahoo whether or not they can crawl your website catalogs. More precise control over robots can be achieved by uploading a file containing specific robot control instructions to your website.

- To create this file, open a plain text editor such as Notepad and save a new file named "robots.txt."

- The contents of this new file depend on what your intentions are. Below are some sample scenarios.

Scenario 1 - Remove all robot restrictions:

    User-agent: *
    Disallow:

Scenario 2 - Remove specific robot restrictions:

    User-agent: botname
    Disallow:

Note: You must use the actual name of the robot in place of botname for this to work.

Scenario 3 - Give specific robots specific permissions:

    User-agent: botname
    Disallow: /bikespeak
    Disallow: /nobots
    Disallow: /page.cfm?pageId=XXX

Note: Replace XXX above with the actual PageID number on your website.
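Before sending a file in, it can help to check that the rules do what you intend. The sketch below is one possible way to do that with Python's standard `urllib.robotparser` module, using the Scenario 3 rules above. The `check_paths` helper and the sample paths are made up for this example, and the `page.cfm` rule is left out because its PageID is specific to your site.

```python
from urllib.robotparser import RobotFileParser

# Scenario 3 rules, without the page.cfm line (its PageID is site-specific).
# "botname" is the placeholder from the scenario, not a real robot name.
SCENARIO_3 = """\
User-agent: botname
Disallow: /bikespeak
Disallow: /nobots
"""


def check_paths(rules, robot_name, paths):
    """Map each path to True (robot may crawl it) or False (blocked)."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return {path: parser.can_fetch(robot_name, path) for path in paths}


if __name__ == "__main__":
    # Hypothetical paths chosen only to exercise the rules.
    sample_paths = ["/bikespeak/news", "/nobots", "/catalog"]
    for path, allowed in check_paths(SCENARIO_3, "botname", sample_paths).items():
        print(path, "allowed" if allowed else "blocked")
```

Each `Disallow` line blocks every address that begins with the given path, so `/bikespeak` also covers pages beneath it. Robots not named in the file are unaffected by these rules when the file is read on its own.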

- After creating a robots.txt file (the file must be named "robots.txt"), please contact a member of our Client Success Team at support@smartetailing.com, and we will upload the new file for you.

The file you create will be appended to our default file. If you change a User-agent rule that is already included in the default file, your rule will override the default, because robots read the file from top to bottom and your rule will appear below ours.